Sector Deep Dive #1: REINFORCEMENT LEARNING

Companies that build and sell Reinforcement Learning products

1. The big picture you might not expect

Reinforcement Learning (RL) is often treated like a moonshot: amazing demos but not very dependable in production. But the past few years tell a different story. A handful of very practical deployments and a maturing toolchain around simulators, data, and post-training are quietly turning RL into a repeatable product category for operations, robotics, and model alignment.

Here are the unexpected parts that matter over the next 12–24 months:

RL has already paid real, measurable bills in production. DeepMind’s control system for Google data centers cut cooling energy by up to 40% when it was first deployed in a human-in-the-loop configuration in July 2016. From August 2018, Google ran a fully autonomous controller that delivered ongoing energy savings across multiple sites. Both deployments were publicly documented by the teams involved, which is rare for infra case studies.

Instruction-following AI exists because RL works at scale. OpenAI’s InstructGPT paper (posted March 4, 2022) showed human evaluators preferred outputs from a 1.3B parameter RLHF-tuned model over the original 175B GPT-3. That single result is why almost every modern model stack includes RLHF (Reinforcement Learning from Human Feedback) or a cousin such as DPO (Direct Preference Optimization) / GRPO (Group Relative Policy Optimization) in production alignment.

Simulation is the hidden kingmaker. Microsoft’s Project Bonsai and Siemens demonstrated >30x faster CNC auto-calibration in May 2018, with a domain expert (not an ML specialist) building the agent. Today Bonsai plugs into MathWorks Simulink and AnyLogic, letting teams train safely in simulation and ship to factory lines with fewer surprises.

Grocery and 3PL logistics are RL’s commercial wedge. Ocado Group bought Kindred Systems and Haddington Dynamics for $262M and $25M respectively in November 2020, explicitly citing deep RL in Kindred’s picking approach. Covariant is live with 3PL Radial, and Symbotic expanded its Walmart partnership on January 16, 2025 by acquiring Walmart’s robotics unit for $200M and securing a $520M development program. This is evidence that large retailers will keep writing large checks for autonomy that improves unit economics.

Education-to-enterprise funnels are changing shape. AWS is retiring the centrally hosted DeepRacer League after 2024. The service remains in console through December 2025 and transitions to an AWS Solution you can run in your own account. Expect “inside-the-enterprise” leagues connected to internal simulators and proprietary data.

The product stack is global. The most credible RL product proof points span the US (Microsoft, AWS, Covariant, Symbotic), UK (DeepMind, Ocado), Germany (BioNTech’s January 2023 acquisition of InstaDeep), and China (Baidu’s RL work in robotics and autonomy). This matters because buyers often prefer local support and local compliance expertise for factory deployments.

Capital markets haven’t given up on RL. JPMorgan’s LOXM project (publicly discussed 2017–2018) used policy learning for execution with guardrails and supervision. Expect more “bandits + rules + audits” than end-to-end black-box RL in finance.

2. Where RL is actually delivering value (and why it’s defensible)

Industrial control / energy efficiency. Datacenter cooling is a canonical example because the objectives are simple (keep PUE low, avoid thermal excursions) but the dynamics are messy. DeepMind showed up to 40% cooling energy reduction (2016) followed by an autonomous controller saving roughly 30% across multiple Google sites (2018). This shows RL can run 24/7 provided you bound the action space and keep human oversight for safety. The buyer value here is repeatable OPEX savings, not marginal accuracy points.

Precision calibration and tuning. Siemens and Microsoft’s Project Bonsai demonstrated a >30x speed-up for CNC calibration in 2018. One axis calibrated in ~13 seconds while matching expert precision, and the system was built by a subject-matter expert using “machine teaching” rather than a research team writing algorithms. Longevity matters: Bonsai is now integrated with Simulink and AnyLogic, making sim-to-plant workflows more accessible to control engineers.

Warehouses and last-mile retail logistics. Logistics-grade picking demands adaptable perception and control. Kindred (now part of Ocado) leaned on deep RL for dexterous piece-picking. Ocado keeps emphasizing RL across its “on-grid pick” automation. Covariant landed deployments with Radial (announced February 10, 2023). Symbotic posted $1.822B FY-2024 revenue and deepened Walmart ties in January 2025, adding hundreds of accelerated pickup & delivery centers (APDs) to its roadmap. This is an ecosystem signal that warehouses will standardize on platforms where RL can be an embedded component.

Biotech and complex optimization. BioNTech agreed to acquire InstaDeep in January 2023 (deal up to ~€562M). While much of the public narrative focused on discovery, the near-term operational wins are often in scheduling, experiment design, and supply-chain optimization. These are classic RL-friendly problems with constrained action spaces and strong simulators.

Quant/execution. JPMorgan’s LOXM (first reported July 2017) used RL concepts for execution improvement. The design takeaway for startups is not “ship end-to-end RL”, but wrap RL with supervision, audit logs, and rule-based safety. That’s how you pass risk committees.
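The "wrap RL with supervision, audit logs, and rule-based safety" pattern, which also echoes the bounded-action-space point from the data-center example, might look roughly like the sketch below. Everything here is illustrative: `GuardedController`, its fields, and the temperature numbers are hypothetical names and values, not taken from any of the deployments discussed.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class GuardedController:
    """Hypothetical wrapper: a learned policy proposes, simple rules dispose."""
    policy: Callable[[float], float]  # learned policy: observation -> proposed setpoint
    low: float                        # lowest setpoint the rules allow
    high: float                       # highest setpoint the rules allow
    max_delta: float                  # largest allowed change per decision
    enabled: bool = True              # operator kill switch
    audit_log: List[dict] = field(default_factory=list)

    def act(self, observation: float, current: float) -> float:
        # With the kill switch off, hold the current setpoint instead of asking the policy.
        proposed = self.policy(observation) if self.enabled else current
        # Rule 1: rate limit, so the setpoint cannot jump more than max_delta.
        bounded = min(max(proposed, current - self.max_delta), current + self.max_delta)
        # Rule 2: clamp to the safe operating envelope.
        applied = min(max(bounded, self.low), self.high)
        # Record what the policy saw, what it wanted, and what was actually applied.
        self.audit_log.append({
            "observation": observation,
            "proposed": proposed,
            "applied": applied,
            "overridden": applied != proposed,
            "policy_enabled": self.enabled,
        })
        return applied


# A deliberately bad stand-in policy that always asks for an extreme setpoint.
controller = GuardedController(policy=lambda obs: 100.0, low=18.0, high=27.0, max_delta=1.0)
first = controller.act(observation=30.0, current=22.0)   # rules cap the move at +1.0
controller.enabled = False                                # operator disables the policy
second = controller.act(observation=30.0, current=22.0)  # setpoint is held
```

The design choice worth noting is that the rules and the log live outside the policy, so a risk committee can review them without understanding the learning algorithm.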

3. Who buys, why they sign, and what convinces them

Plant managers and control engineers buy when you can prove bounded exploration (no runaway actuators) and short time-to-value. The Siemens + Bonsai result lands because it collapsed calibration time to seconds on some axes without sacrificing precision, and did so with a domain expert building the agent rather than a research lab parachuting in. That makes RL feel like a tool, not a science project.

Logistics and e-commerce operations leaders buy steadier throughput and fewer mis-picks that integrate cleanly with WMS/ERP. Kindred’s history with Gap and American Eagle pre-acquisition showed real merchant tolerance for RL-powered picking. Covariant’s Radial rollout demonstrates that 3PLs (who standardize across many sites) are willing to pick a platform and expand. Symbotic’s Walmart expansion underscores that once a retailer standardizes, follow-on scope (like APDs) can be large and fast.

CIO/CTO buyers in regulated industries will ask for verification (what happens under edge cases?), observability (what did the policy see and do?), and roll-back (how do we safely disable and revert?). Vendors that bundle formal verification or “safe RL” claims with simulator-backed testing (for example NVIDIA Isaac Sim for robotics or AnyLogic for discrete-event systems) get a smoother reception when pilots transition to production.

Data science and LLM platform teams buy RLHF-style post-training to make models useful to end users. The InstructGPT result (1.3B beating 175B on human preference) remains a watershed that budget owners still cite when defending RLHF spend.
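For readers who want to see what "human preference" turns into during RLHF-style post-training, here is a minimal sketch of the pairwise preference loss commonly used to train a reward model: the model is pushed to score the response a labeler preferred above the one they rejected. The function name and the scalar inputs are illustrative assumptions; real pipelines compute this over batches of model outputs.

```python
import math


def pairwise_preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Return -log(sigmoid(reward_chosen - reward_rejected)).

    The loss is small when the preferred response already scores higher
    and large when the reward model ranks the pair the wrong way round.
    """
    margin = reward_chosen - reward_rejected
    # -log(sigmoid(m)) equals log(1 + exp(-m)); branch to avoid overflow for large |m|.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


agree = pairwise_preference_loss(2.0, 0.0)      # reward model agrees with the labeler
disagree = pairwise_preference_loss(0.0, 2.0)   # reward model disagrees with the labeler
undecided = pairwise_preference_loss(1.0, 1.0)  # reward model cannot tell them apart
```

The trained reward model then supplies the reward signal that the RL step optimizes against.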

4. The product stack behind successful RL deployments

Simulators and digital twins. You don’t let a learning controller “trial-and-error” on a live kiln or warehouse unless it’s practiced extensively in a high-fidelity simulator. That’s why connectors and toolchains matter:

MathWorks Simulink + Microsoft Project Bonsai (announced May 19, 2020) allows control engineers to reuse existing Simulink models as training environments.

AnyLogic + Project Bonsai (announced July 14, 2020) supplies an official connector and wrapper for quick simulator hookups, which is good for factories and logistics networks modeled in discrete-event or agent-based styles.

NVIDIA Isaac Sim provides physics-accurate robot simulation to train and test RL policies before touching the real arm.

Foundational RL libraries:

Ray RLlib (Anyscale) remains a widely used distributed RL library that “just works” at cluster scale.

Unity ML-Agents bridges game-quality 3D simulation with RL for robotics and control.

Gymnasium (community successor to OpenAI Gym) standardized the environment API used across the ecosystem.

TF-Agents (Google’s TensorFlow team) is still useful where TensorFlow is entrenched.

Intel Coach is an older but illustrative example of chip-vendor RL tooling from Intel’s AI lab.
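To make the "standardized environment API" point concrete, here is a plain-Python sketch that follows the Gymnasium call shape: `reset` returns an observation and an info dict, and `step` returns observation, reward, terminated, truncated, and info. The class deliberately does not import or subclass anything from Gymnasium, and `CoolingEnv`, its temperatures, and its rewards are made-up illustration values.

```python
import random


class CoolingEnv:
    """Toy cooling plant that mirrors the Gymnasium reset/step call shape."""

    def __init__(self, max_steps: int = 20):
        self.max_steps = max_steps
        self.rng = random.Random()
        self.temp = 0.0
        self.steps = 0

    def reset(self, seed=None, options=None):
        if seed is not None:
            self.rng.seed(seed)
        self.temp = self.rng.uniform(20.0, 24.0)  # start in a comfortable range
        self.steps = 0
        return self.temp, {}

    def step(self, action: int):
        # action 1 = fan on (cools by 1 degree, costs energy); action 0 = fan off (warms by 1 degree)
        self.temp += -1.0 if action == 1 else 1.0
        self.steps += 1
        reward = -1.0 if action == 1 else 0.0
        terminated = self.temp >= 30.0           # overheated: the episode ends badly
        if terminated:
            reward -= 10.0
        truncated = self.steps >= self.max_steps and not terminated  # time limit reached
        return self.temp, reward, terminated, truncated, {}


env = CoolingEnv()
obs, info = env.reset(seed=0)
first_obs = obs
total_reward = 0.0
done = False
while not done:
    action = 1 if obs > 25.0 else 0  # hand-written thermostat standing in for a policy
    obs, reward, terminated, truncated, info = env.step(action)
    total_reward += reward
    done = terminated or truncated
```

Because training libraries only see `reset` and `step`, the same agent code can be pointed at a toy like this, a simulator, or a digital twin.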

Cloud RL services:

Microsoft Project Bonsai (Bonsai acquired June 2018, public preview May 2020) focuses on “machine teaching” for subject-matter experts and integrates with leading simulators.

AWS SageMaker RL (announced November 28, 2018) offers managed RL containers and RLEstimator. It integrates toolkits like RLlib and Intel Coach and supports commercial/custom environments.

5. Libraries and frameworks: how to choose (fast)

Teams keep asking the same question: “Which RL/RLHF stack do we pick, and when?” Here’s a practical guide grounded in currently maintained projects and their stated capabilities:

Need something quick that fits Hugging Face? → Use TRL.

Hugging Face’s TRL library has ready-made trainers (PPO, DPO, GRPO) and copy-paste examples that work with the HF model ecosystem. It’s the fastest way to get an RLHF loop running without standing up lots of infra.

Training very large models, from one GPU up to massive clusters? → Use NVIDIA NeMo-RL or ByteDance’s verl.

NeMo-RL targets production-scale RLHF for LLMs (100B-class model claims in docs/marketing) and integrates with NeMo’s distributed training stack. verl (from ByteDance/Volcengine) is an open-source RLHF system designed for speed and scale. If you’re already in NVIDIA land, NeMo-RL is the natural fit. If you want a lean OSS stack that scales, verl is a strong option.

Already run a Megatron/vLLM/Ray-style cluster and want a full RLHF setup? → Use Alibaba ROLL or Zhipu/THUDM SLiME.

ROLL (Alibaba) focuses on high-throughput RLHF for big GPU fleets. SLiME (THUDM/Zhipu AI ecosystem) explicitly connects Megatron training with SGLang serving for scaled RLHF. Both target production post-training on large clusters. (If you’re deeply on Ray/vLLM, OpenRLHF is also built exactly for that combo.)

Training across many separate or partly untrusted machines? → Use prime-rl.

prime-rl is a fully asynchronous, distributed RL/RLHF system designed for flaky or heterogeneous clusters. Its authors used it to train the INTELLECT-2 model. If your infra looks like a federation of nodes rather than a tidy HPC cluster, this is built for you.

Want RL for tool-using chatbots/agents right now? → Try SkyRL or OpenPipe/ART.

SkyRL (Sky Computing/UC Berkeley contributors) includes an “agent gym” for long-horizon tool use and evaluation. ART (from OpenPipe) focuses on reliable GRPO training for agents, with practical recipes rather than a heavy platform. These are aimed squarely at agentic tasks, not only static benchmarks.

Mostly doing instruction-tuning (not full RL)? → Use AI2’s Open-Instruct.

Open-Instruct from the Allen Institute (AI2) is a clean, simple codebase for instruction/post-training pipelines. It’s great when you don’t need RL loops.

Just want the core DPO/PPO bits in plain PyTorch? → Use torchtune.

torchtune (Meta) ships PyTorch-native recipes and losses for PPO, DPO, and GRPO without the extra layers of large frameworks. This is useful for teams that prefer minimal abstractions.

Need GRPO plus built-in environments and eval tools? → Use willccbb/verifiers.

verifiers is a modular GRPO/DPO training/eval toolkit that works with Hugging Face’s Trainer and can plug into other stacks like prime-rl. Good for standing up an end-to-end loop with credible evaluation.

Reproducing frontier “reasoning” agents (e.g. R1/O-series-style research)? → Use agentica-project/rLLM.

rLLM is an academic, all-in-one framework to train LLM agents with RL, maintained by the Agentica Project (with UC Berkeley/Sky Computing involvement). Choose this when you need research faithfulness and multi-env support more than a polished enterprise UX.
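Several of the stacks above advertise GRPO, so a small sketch of its core idea may help: sample a group of responses to the same prompt, score each one, and use each score's distance from the group average as its advantage, with no separate value network. This is a simplified illustration and not any listed framework's implementation. The function name is hypothetical, and the choice of population standard deviation plus a small `eps` is an assumption that real implementations may handle differently.

```python
import statistics


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each reward against the other samples for the same prompt."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std over the group (an assumption)
    # eps keeps the division finite when every sample received the same reward.
    return [(r - mean) / (std + eps) for r in rewards]


# Four sampled answers to one prompt, scored 1.0 if a checker accepted them, else 0.0.
advantages = group_relative_advantages([1.0, 0.0, 0.0, 1.0])

# If every sample gets the same score, there is nothing to learn from this group.
flat = group_relative_advantages([1.0, 1.0, 1.0])
```

Accepted answers end up with positive advantages and rejected ones with negative advantages, which is what the policy update then reinforces or discourages.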

Two closing notes on the framework landscape: (a) you can mix and match, e.g. simulate in Isaac Sim or AnyLogic, train with NeMo-RL or ROLL, align with TRL or torchtune, and serve with vLLM/SGLang; (b) expect consolidation: winners will be those that meet infra teams where they are (Kubernetes, Slurm, on-prem GPU pools) and play nicely with existing observability/logging.

6. The companies and ecosystems you’ll keep hearing about

Cloud platforms and labs:

Microsoft (Project Bonsai): Deep integrations with Simulink and AnyLogic keep it attractive for industrial control.

AWS: SageMaker RL (since 2018) and the DeepRacer education funnel (league ending after 2024, service available in console through December 2025, then continuing as an AWS Solution).

Google DeepMind: The data-center cooling results (2016/2018) remain the go-to reference for “RL in critical infrastructure”.

OpenAI: InstructGPT (March 4, 2022) codified RLHF as the default alignment step in modern LLM pipelines.

Baidu: Active RL/autonomy research (e.g. RL for robotics and traffic signal control, Apollo RL platform papers), signaling ongoing investment on the China side of the market.

IBM / Intel / Salesforce: Ecosystem contributors (Intel’s Coach RL library; Salesforce Research has historically released performance-minded RL tools).

Simulation products / RL Environment / RL-as-a-Service:

MathWorks (Simulink) and AnyLogic: Official connectors to Project Bonsai provide enterprise-friendly entry points.

NVIDIA Isaac Sim: Physics-accurate sim for robot policy training and validation.

Unity ML-Agents, Ray RLlib (Anyscale), Gymnasium/Gym, TF-Agents: The open-source backbone for many RL stacks.

Companies like Applied Compute, Veris AI, Kaizen, Mechanize, and Osmosis are providing RL infra and services to let customers infuse RL into their products.

Robotics and logistics:

Ocado Group: Acquired Kindred Systems ($262M) and Haddington Dynamics ($25M) in Nov 2020, and repeatedly calls out deep RL for picking.

Kindred Systems: Piece-picking. Past customers include Gap and American Eagle.

Haddington Dynamics: Low-cost dexterous arms. Acquired for $25M.

Covariant: Deployed with Radial in 2023. Strong 3PL fit.

Micropsi Industries: “MIRAI” product adapts to variability in tasks like cable assembly. RL-style learning under the hood.

OSARO: Picking and depalletizing software with learning-based control.

Vicarious: Acquired by Alphabet’s Intrinsic in 2022, pointing to consolidation of manipulation/learning talent.

Symbotic: Public warehouse-automation bellwether. $1.8B FY-2024 revenue and a Jan 16, 2025 deal to acquire Walmart’s robotics unit for $200M, paired with a $520M development program covering 400 APDs over time.

Healthcare and biotech:

BioNTech: Acquired InstaDeep (Jan 10, 2023) to bring advanced AI (including RL) in-house for discovery and operations.

Finance:

JPMorgan: LOXM execution agent (reported by the Financial Times in 2017), an early example of RL-style policy learning with controls in a high-stakes domain.

Smaller companies:

BeChained (industrial energy optimization), Predictiva (trading), Telemus AI (RL training/eval tools), PLAIF (ROS-to-KEBA control demos), Surge AI (data labeling used in RLHF pipelines). Each points at niche opportunities in energy, finance, robotics control, and data operations.

Ecosystem names appearing as adopters/partners include Gap, American Eagle, Walmart, and Radial.

7. Go-to-market patterns, risks, and moats you can actually underwrite

The sim-first deployment loop is a moat. If your RL product depends on high-fidelity simulators and digital twins, integration depth with Simulink, AnyLogic, or Isaac Sim becomes a practical switching cost. Once a control policy is validated against a company’s “digital plant”, ripping it out is painful. And this is especially true if you’ve also instrumented observability, roll-back, and safety checkers around the policy.

Education channels are moving in-house. AWS DeepRacer seeded hundreds of thousands of learners, but the league’s retirement after 2024 and the shift to an AWS Solution in 2025 signal a new model: companies will run their own “leagues”, tie them to internal simulators and datasets, and keep IP in-house. Vendors who support that motion (private clouds, custom tracks, enterprise SSO) will win training budgets and later production work.

Data and alignment work is sticky. Because most LLM stacks now include RLHF (thanks to InstructGPT’s result), any vendor who supplies reliable feedback data (e.g. labeling platforms such as Surge AI) or dependable reward/eval tooling (e.g. verifiers) can become embedded in model-lifecycle operations. This is an emerging moat that doesn’t look like “classic SaaS”, but behaves like it in practice.

Consolidation is a feature, not a bug. Ocado’s purchases of Kindred and Haddington and Intrinsic’s acquisition of Vicarious show that large buyers prefer packaged stacks with talent attached. For startups that only provide specific tools, that means the exit path is often “get three logos, prove reliability, and get acquired”. If you want to swing for a long-run IPO, you need to grow beyond a pure-play tool and build a full-stack offering.

Geography matters. Local compliance and support remain a gating factor for factory and logistics deployments. The center of gravity is multinational (US, UK, Germany, China), so startups that find the right regional system integration partners (or ride with Microsoft/AWS channel programs) will scale faster than pure-direct sellers.

8. How to connect the dots to infra startups (dependencies, correlations, and “gotchas”)

GPU supply and cluster managers → which RLHF framework wins. If your customer already runs Megatron for pretraining and SGLang/vLLM for serving, SLiME or ROLL will feel native. If they live in NeMo land, NeMo-RL wins by default. If they want total flexibility or untrusted nodes, prime-rl unlocks federated training. The point is that infra choices decide the RLHF tool before a modeler opens a notebook.

Simulator availability ↔ sales velocity. The fastest deployments happen where the buyer already maintains trusted simulators (Simulink for control, AnyLogic for operations, Isaac Sim for robots). If a prospect cannot simulate, your sales cycle includes a modeling project. Time-to-value stretches out and your gross margin takes a hit.

Data labeling and evaluation → sticky, recurring services. RLHF needs high-quality preference data and robust evaluations. That creates a repeat services layer (often billed on volume or seats) that compounds over time and raises switching costs. This is subtle, but powerful. The verifiers framework codifies evals. Data vendors like Surge AI are common in RLHF case studies.

Retail automation deals ripple through the stack. The Symbotic–Walmart expansion isn’t just a warehouse story. It pulls in upstream component vendors (arms, vision systems), software (WMS integration), and sometimes nearby last-mile tech (APDs). Startups supplying perception, grasp planning, or scheduling can ride these waves even if they aren’t the “prime” vendor.

Safety and audit features are not optional. Particularly in finance and heavy industry, buyers will demand logs, simulators for “what if” replays, and override circuits. LOXM’s early disclosures and the widespread use of guardrails in enterprise LLM deployments show that RL succeeds commercially when paired with simple, explainable controls.

9. Risks, surprises, and what to watch in the next 24 months

Sim-to-real gaps can bite. Even with good models, differences between simulation and reality can cause regressions. The mitigation is boring but effective: domain randomization, staged rollouts, and layered safety constraints. Vendors with proven simulator connectors (Simulink/AnyLogic/Isaac) and robust A/B failovers have an edge.

Vendor stability and consolidation risk. If your RL vendor gets acquired (e.g. Vicarious → Intrinsic in 2022) or pivots, your roadmap may change overnight. Large buyers like Ocado handle this by buying the capability outright. If you’re an investor, favor startups that integrate with the buyer’s existing simulators and control stack. This reduces “platform hostage” risk at renewal.

Education channels are moving away from centrally hosted showcases. With DeepRacer’s league ending after 2024, teams will need new ways to upskill engineers. That could slow top-of-funnel unless vendors provide simple, self-hosted training kits and enterprise competitions. The flip side: internal leagues may produce more deployable pilots because they’re built on company models and data from day one.

Regulatory and safety scrutiny. As RL touches physical systems and financial execution, expect more audit requirements. Startups that package policy introspection and “explainable controls” will find compliance less of a throttle.
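The sim-to-real mitigations named earlier in this section (domain randomization and staged rollouts) are simple enough to sketch. The code below is a hedged illustration: the parameter names and ranges, the stage names, and the 98% promotion threshold are all hypothetical placeholders that a real team would replace with values from its own simulator and risk policy.

```python
import random

# Hypothetical simulator parameters and the ranges to randomize them over.
PARAM_RANGES = {
    "friction": (0.8, 1.2),
    "payload_kg": (0.5, 2.0),
    "sensor_noise": (0.0, 0.05),
}

# Hypothetical rollout ladder, from safest to widest exposure.
STAGES = ["simulation", "shadow", "single_site", "fleet"]


def sample_sim_params(rng: random.Random) -> dict:
    """Domain randomization: draw fresh physics parameters for each training episode."""
    return {name: rng.uniform(lo, hi) for name, (lo, hi) in PARAM_RANGES.items()}


def next_stage(current: str, success_rate: float, safety_violations: int,
               min_success: float = 0.98) -> str:
    """Staged rollout gate: promote on clean results, demote on any safety violation."""
    i = STAGES.index(current)
    if safety_violations > 0:
        return STAGES[max(i - 1, 0)]                # fall back one stage
    if success_rate >= min_success:
        return STAGES[min(i + 1, len(STAGES) - 1)]  # advance one stage
    return current                                  # hold and keep collecting evidence


rng = random.Random(0)
episode_params = [sample_sim_params(rng) for _ in range(3)]
promoted = next_stage("shadow", success_rate=0.99, safety_violations=0)
demoted = next_stage("shadow", success_rate=0.99, safety_violations=1)
```

Training across many sampled parameter sets makes the policy less dependent on any single simulator setting, and the gate keeps exposure small until the evidence justifies widening it.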

Catalysts to watch near term:

Walmart–Symbotic APD deployments moving from design to rollout. Watch for first-site go-lives and backlog updates.

Deeper Simulink/AnyLogic integrations (connectors, templates) that shorten time-to-pilot for industrial buyers.

NeMo-RL/ROLL/SLiME performance wins on big clusters and better agent stacks (e.g. SkyRL, ART) proving stable tool use over long horizons.

Internal “leagues” at F500s replacing DeepRacer as a talent funnel.

Names you’ll keep encountering (complete coverage of earlier mentions):

Platforms/labs: Microsoft (Project Bonsai), AWS (SageMaker RL / DeepRacer), Google DeepMind, OpenAI, Baidu, IBM, Intel, Salesforce.

RL infra products: MathWorks (Simulink), AnyLogic, NVIDIA Isaac Sim, Unity ML-Agents, Ray RLlib (Anyscale), Gymnasium/Gym, TF-Agents, Intel Coach, Applied Compute, Veris AI, Kaizen, Mechanize, Osmosis.

Robotics/logistics: Ocado Group, Kindred Systems, Haddington Dynamics, Covariant, Radial, Micropsi Industries, OSARO, Vicarious (Intrinsic), Symbotic, plus adopters Gap, American Eagle, Walmart.

Healthcare/biotech: BioNTech, InstaDeep.

Finance: JPMorgan.

Startups/tools: BeChained, Predictiva, Telemus AI, PLAIF, Surge AI.

New RL/RLHF stacks: TRL, NVIDIA NeMo-RL, ByteDance/volcengine verl, Alibaba ROLL, Zhipu/THUDM SLiME, prime-rl, SkyRL, OpenPipe/ART, AI2 Open-Instruct, torchtune, willccbb/verifiers, agentica-project/rLLM.

If you’re tracking this sector for venture, the investable themes over the next two years are:

Sim-tied RL for operations where you can measure OPEX savings quickly (HVAC, calibration, scheduling).

Robot manipulation/picking stacks that demonstrate site-to-site generalization and clean WMS/ERP hooks.

RLHF infrastructure (data, eval, training frameworks) that meets infra teams where they are (Kubernetes/Slurm, Megatron, NeMo, vLLM/SGLang) and ships with the guardrails enterprises demand.

10. Why these pieces fit together

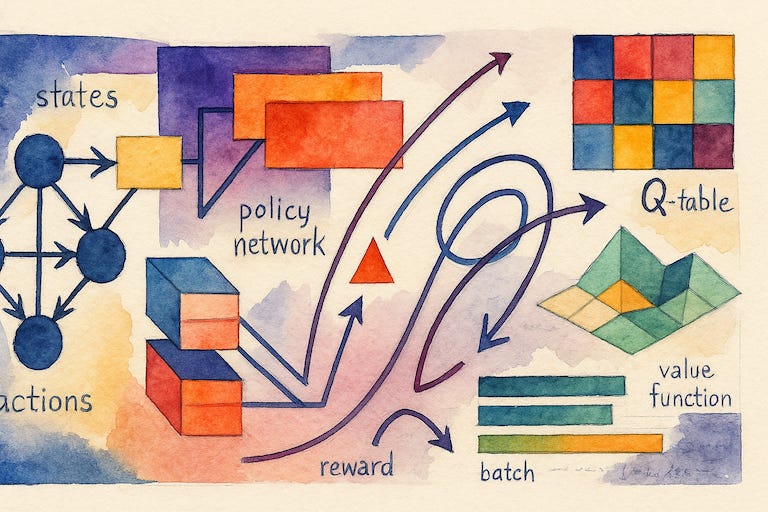

Think of RL as “learned control” rather than “AI magic”. In a factory or warehouse, you already have sensors and actuators.

The missing piece is a policy that maximizes a goal while avoiding bad states.

Policy is a set of choices about which action to take in each situation.

Goal is something like energy savings, picks per hour, or calibration accuracy.

Bad state is something like overheating, collisions, or mis-picks.
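Those three terms can be made concrete with a toy example. The sketch below uses tabular Q-learning, a textbook RL algorithm chosen here purely for illustration, on an invented cooling problem: the goal is to spend as little fan energy as possible, the bad state is overheating, and the learned policy is a table saying when to switch the fan on. All numbers (temperature levels, costs, learning settings) are assumptions for the example.

```python
import random

N_TEMPS = 5               # temperature levels 0..4; level 4 is the bad state (overheated)
FAN_OFF, FAN_ON = 0, 1


def step(temp, action):
    """Return (next_temp, reward, done) for the toy cooling plant."""
    if action == FAN_ON:
        next_temp, reward = max(temp - 1, 0), -1.0   # cooling costs energy
    else:
        next_temp, reward = temp + 1, 0.0            # free, but the plant warms up
    if next_temp == N_TEMPS - 1:
        return next_temp, reward - 10.0, True        # bad state: heavy penalty, episode ends
    return next_temp, reward, False


def train(episodes=5000, alpha=0.5, gamma=0.9, max_steps=50, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_TEMPS - 1)]     # one row per safe temperature level
    for _ in range(episodes):
        temp = rng.randrange(N_TEMPS - 1)            # start from a random safe level
        for _ in range(max_steps):
            action = rng.randrange(2)                # explore uniformly; Q-learning is off-policy
            next_temp, reward, done = step(temp, action)
            target = reward if done else reward + gamma * max(q[next_temp])
            q[temp][action] += alpha * (target - q[temp][action])
            if done:
                break
            temp = next_temp
    return q


q = train()
# The policy: for each temperature level, pick the action with the higher learned value.
policy = [row.index(max(row)) for row in q]
```

In this toy setup the learned policy leaves the fan off at cooler levels and switches it on only at the level just below overheating, which is the "maximize a goal while avoiding bad states" behavior in miniature.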

What makes RL commercially usable now are three things:

Simulation first, deployment later. When you can train policies in a digital twin (Simulink, AnyLogic, Isaac Sim), you sidestep most of the risk. That’s why the Siemens + Bonsai story resonates: a domain expert could encode the task and use a platform to do the heavy lifting.

Tooling that meets infra where it lives. In LLM land, RLHF stacks like TRL, NeMo-RL, verl, ROLL, SLiME, and prime-rl now align with the way infra teams actually run workloads (on Kubernetes, Slurm, or tightly packed DGX pods). Many of these stacks come with sane defaults and recipes so teams can spend more time on what to optimize and less on how to wire up the training loop.

Proof that buyers will pay when outcomes are clear. Energy bills and pick-rates are easy to measure. A pilot that shows 30–40% energy savings or steady throughput uplift writes its own business case. That’s why DeepMind / Google, Ocado / Kindred, Covariant / Radial, and Symbotic / Walmart matter.

What this means for venture

Don’t fund research projects. Fund “boring excellence”. The winners aren’t the flashiest algorithms. They’re the teams who make deployments predictable and safe, with rock-solid simulator hooks and guardrails.

Back the “glue” layers. Evaluation suites (e.g. verifiers), high-quality preference data vendors, and integration connectors are under-invested and deeply sticky.

Assume consolidation. If a startup gets three industrial logos and shows solid uptime, it’s a candidate for acquisition (as Vicarious and Kindred/Haddington show). Invest with that outcome in mind.

Expect internal leagues to replace showcase programs. As DeepRacer transitions, enterprises will “own” their RL training funnels. That’s a place for startups to sell hosted competitions, simulator content, and analytics dashboards behind the firewall.

Closing thought

Reinforcement learning stopped being a lab toy the moment it started saving money in data centers and picking real items in warehouses. The next two years won’t be about one grand breakthrough. They’ll be about repeatable, simulator-backed deployments across factories and logistics, and RLHF stacks that make large models actually helpful. If you invest in the pieces that make those two motions boring and reliable, you’re investing where the value will quietly compound.
