RLenvironment.com - Tracking live signals from RL Repos

Built an agent and a website to track 49 RL repos on GitHub and extract signals from them

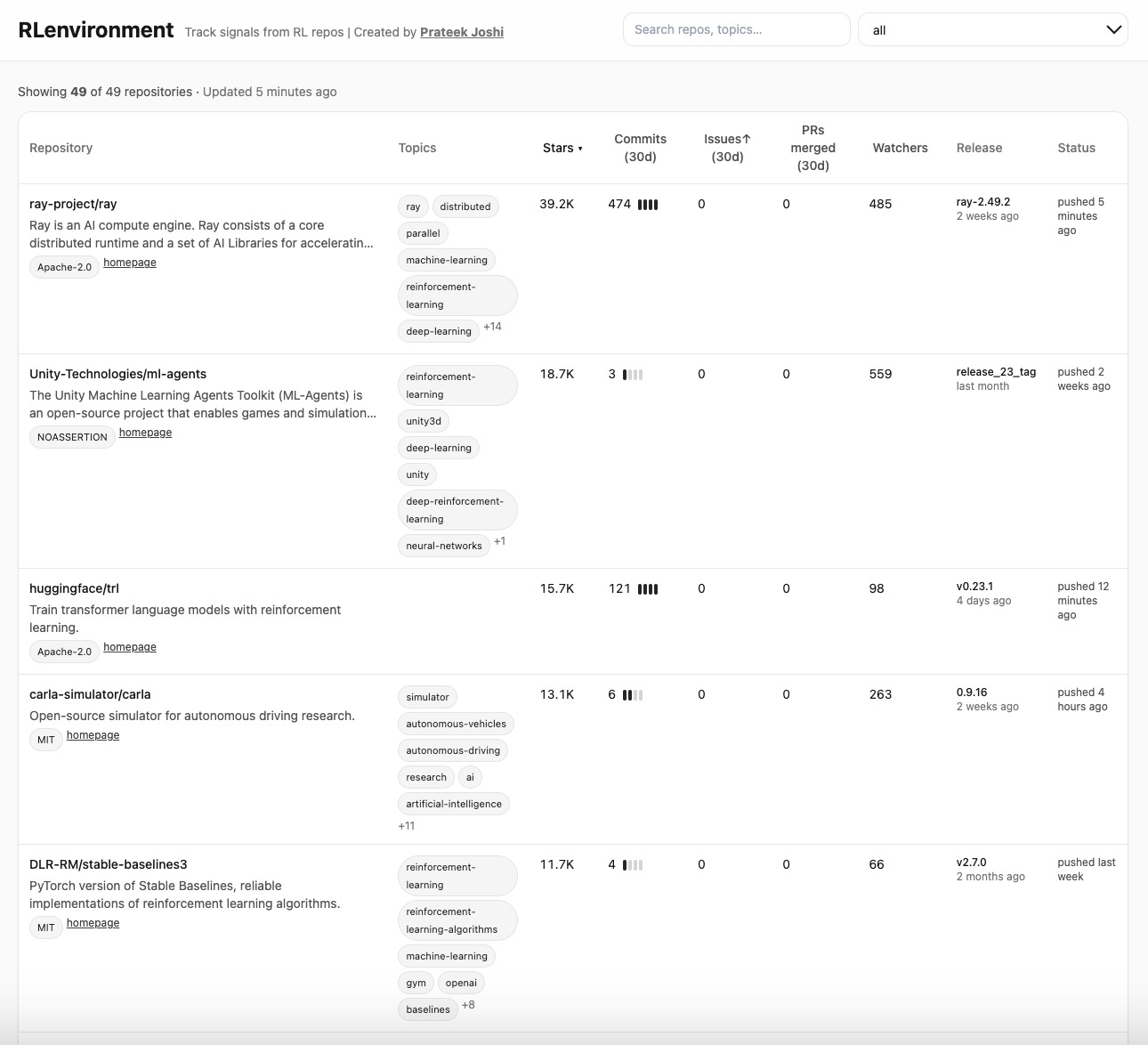

I built an agent to analyze 49 open-source RL repos, and built RLenvironment.com to show the live signals. Check it out and let me know what you think. Here is the latest snapshot:

Portfolio-level signals

Scale and freshness: 240,832 total stars across 49 repos. 24 repos were active in the last 30 days (1,392 commits), 10 shipped a release in the last 30 days, and 2 are archived.

Power centers: Google/DeepMind, Farama, Nvidia Isaac, OpenDILab, Meta, HF anchor a big share of stars and recent commits.

Where the work is: the mix is Library/Algos > Environments/Simulators > Platforms/Runtimes. Multi-agent, robotics, and offline RL are well represented (some would argue that offline RL is not real RL, but that’s for another post).

Leaderboard: Scale and mindshare

By stars (top 10): ray-project/ray, Unity ML-Agents, HF/trl, CARLA, Stable-Baselines3, OpenAI Spinning Up, Google Dopamine, DeepMind MuJoCo, Farama Gymnasium, Tianshou. These are the “safe defaults” for integrations and community reach.

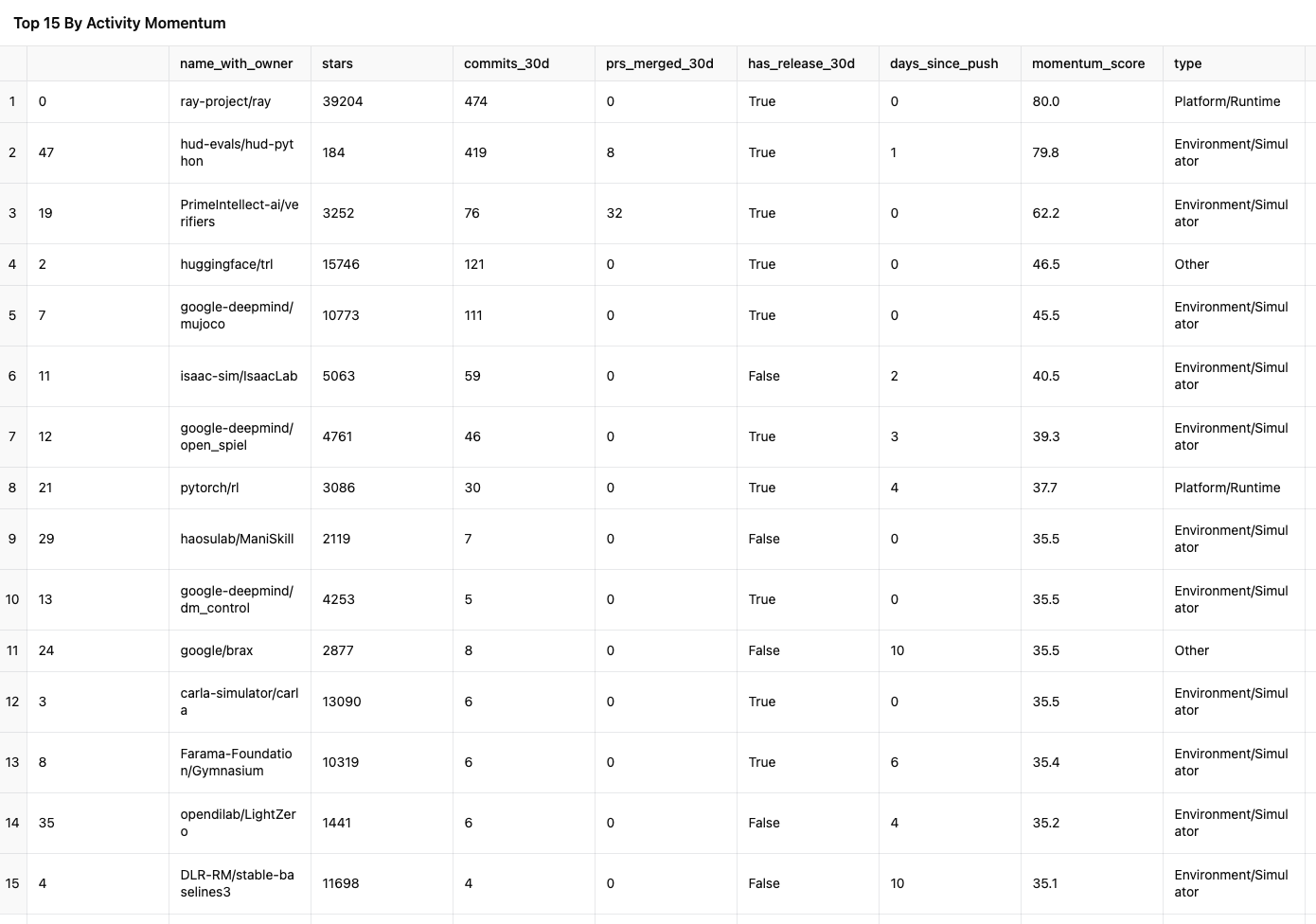

Momentum: Shipping velocity now

Hottest by activity score (commits_30d, PRs_merged_30d, push/release recency): strong showings from huggingface/trl, ray-project/ray, google-deepmind/mujoco, google-deepmind/open_spiel, isaac-sim/IsaacLab, pytorch/rl, PrimeIntellect-ai/verifiers. These are the best near-term partnership/signal taps.
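For readers wondering how an activity score like this could be assembled from those inputs, here is a minimal sketch. The weights, caps, log scaling, and 30-day recency window are my own assumptions for illustration, not the exact formula behind the site, and `RepoActivity` is a hypothetical container.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class RepoActivity:
    """Hypothetical per-repo inputs; field names mirror the signals listed above."""
    name: str
    commits_30d: int
    prs_merged_30d: int
    days_since_push: int
    days_since_release: Optional[int]  # None when the repo has no releases


def recency(days: Optional[int], horizon: int = 30) -> float:
    """Linear decay from 1.0 (today) to 0.0 (at or beyond the horizon)."""
    if days is None:
        return 0.0
    return max(0.0, 1.0 - days / horizon)


def log_scaled(count: int, cap: int) -> float:
    """Log-scale a count into [0, 1] so very large repos do not dominate."""
    return min(math.log1p(count) / math.log1p(cap), 1.0)


def activity_score(r: RepoActivity, commit_cap: int = 200, pr_cap: int = 100) -> float:
    """Blend the four signals into a 0-100 score (assumed weights: 40/30/20/10)."""
    score = (
        0.4 * log_scaled(r.commits_30d, commit_cap)
        + 0.3 * log_scaled(r.prs_merged_30d, pr_cap)
        + 0.2 * recency(r.days_since_push)
        + 0.1 * recency(r.days_since_release)
    )
    return round(100 * score, 1)


if __name__ == "__main__":
    # Made-up repos, not real measurements.
    sample = [
        RepoActivity("example/busy-lib", 150, 60, 1, 7),
        RepoActivity("example/quiet-env", 2, 0, 40, None),
    ]
    for repo in sorted(sample, key=activity_score, reverse=True):
        print(repo.name, activity_score(repo))
```

Log scaling is one way to keep a monorepo with thousands of commits from flattening everyone else; a percentile rank across the tracked repos would be a reasonable alternative.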

Emerging movers (low stars, high momentum)

“Under-the-radar but shipping” (stars < 1k, momentum ≥ 70): e.g. hud-evals/hud-python (rapid cadence in LLM-RL eval/envs), instadeepai/Mava (JAX MARL), plus a few niche env/runtime repos. These are prime candidates for early collabs, grants, or feature pilots.
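The "under-the-radar but shipping" cut is just a two-condition filter. A minimal sketch, using the thresholds stated above (stars < 1k, momentum ≥ 70); the `Repo` tuple and the sample rows are hypothetical and not taken from the tracker's data.

```python
from typing import List, NamedTuple


class Repo(NamedTuple):
    """Hypothetical row: repo name, star count, and a 0-100 momentum score."""
    name: str
    stars: int
    momentum: float


def emerging_movers(
    repos: List[Repo], max_stars: int = 1000, min_momentum: float = 70.0
) -> List[Repo]:
    """Keep low-star, high-momentum repos, highest momentum first."""
    picked = [r for r in repos if r.stars < max_stars and r.momentum >= min_momentum]
    return sorted(picked, key=lambda r: r.momentum, reverse=True)


if __name__ == "__main__":
    # Made-up values for illustration only.
    sample = [
        Repo("example/big-and-busy", 30000, 95.0),
        Repo("example/small-and-shipping", 400, 82.0),
        Repo("example/small-and-quiet", 250, 12.0),
        Repo("example/niche-runtime", 900, 71.5),
    ]
    for repo in emerging_movers(sample):
        print(repo.name, repo.stars, repo.momentum)
```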

Areas heating up

LLM-RL environments and verifiers: PrimeIntellect-ai/verifiers, hud-evals/hud-python show fast iteration → good proxy for RLHF/RLVR ecosystem traction.

Physics and robotics: MuJoCo, IsaacLab, ManiSkill have active pipelines → strong for sim-to-real stories and embodied agents.

Core libraries: TRL, PyTorch RL, Tianshou, SB3 remain the practical workhorses for researchers/teams.

Risk and maintenance flags

Issue backlog hotspots: very large open-issue queues in ray-project/ray, carla-simulator/carla, facebookresearch/habitat-lab → watch for maintainer bandwidth + triage pace.

Staleness: a handful of well-known repos (some with big star counts) have been stale for more than 90 days → fine for legacy baselines, not great for new dependencies.
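If you want to run the same staleness check on your own dependency list, here is a rough sketch. It assumes staleness is measured from the last push timestamp in the ISO 8601 form GitHub's REST API returns as `pushed_at`; whether the tracker uses pushes, commits, or releases for this flag is my assumption, and the helper names are hypothetical.

```python
from datetime import datetime, timezone


def days_since_push(pushed_at: str, now: datetime) -> int:
    """Whole days between an ISO 8601 UTC timestamp (e.g. '2024-09-01T00:00:00Z') and now."""
    # Swap the trailing 'Z' for an explicit offset so older Python versions can parse it.
    pushed = datetime.fromisoformat(pushed_at.replace("Z", "+00:00"))
    return (now - pushed).days


def is_stale(pushed_at: str, now: datetime, threshold_days: int = 90) -> bool:
    """True when the last push is more than threshold_days old (the >90d cut above)."""
    return days_since_push(pushed_at, now) > threshold_days


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    # Made-up timestamps for illustration only.
    for name, pushed_at in [
        ("example/legacy-baseline", "2023-01-15T12:00:00Z"),
        ("example/still-shipping", now.strftime("%Y-%m-%dT%H:%M:%SZ")),
    ]:
        print(name, "stale" if is_stale(pushed_at, now) else "active")
```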

Topic coverage: Where the field is leaning

Most frequent tags: reinforcement-learning, gym/gymnasium, robotics, multi-agent, MuJoCo, PPO/SAC/TD3, imitation/offline RL. Clear skew to embodied control + MARL + practical baselines.
